Siddhant Shrivastava

July 31, 2015

Filed under “GSoC”

Hi! The past week was a refreshingly positive one. I was able to solve some of the insidious issues that had been plaguing my efforts the week before.

Virtual Machine Networking issues Solved!

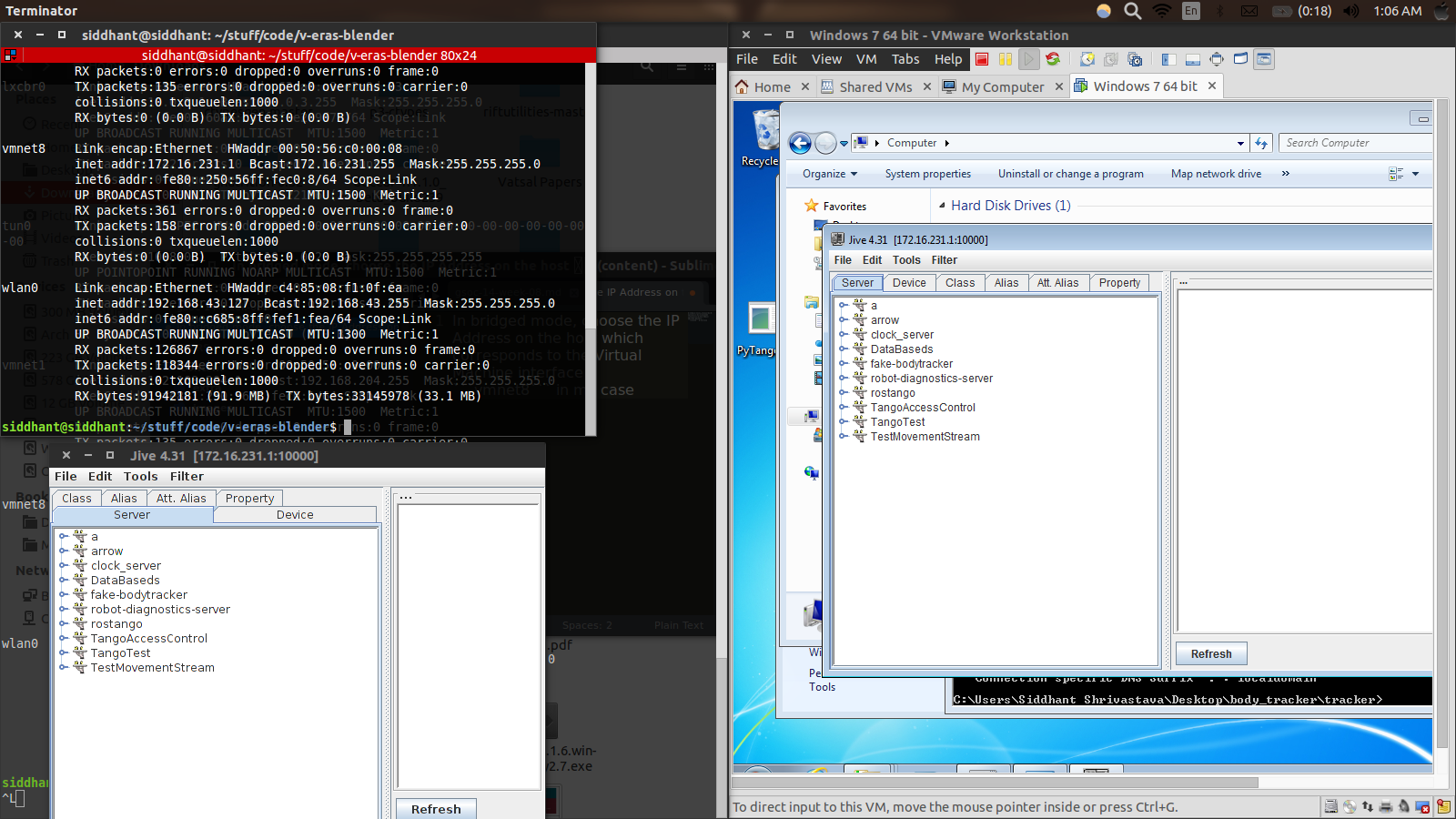

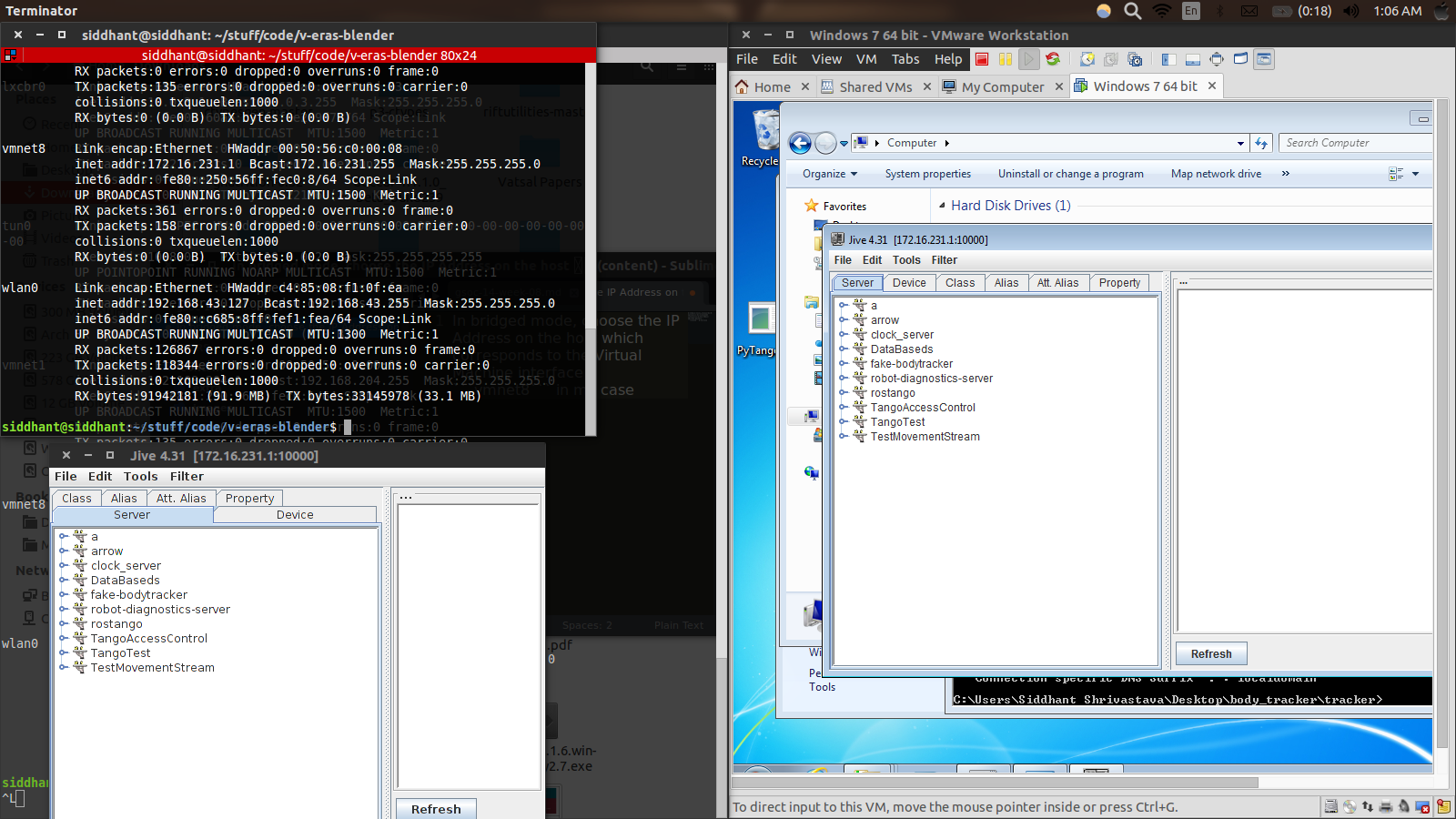

I was able to use the Tango server across the Windows 7 Virtual Machine and the Tango Host on my Ubuntu 14.04 Host Machine. The proper Networking mode for this turns out to be Bridged Networking mode which basically tunnels a connection between the Virtual Machine and the host.

In the bridged mode, the Virtual Machine exposes a Virtual Network interface with its own IP Address and Networking stack. In my case it was vmnet8, with an IP Address different from the address patterns used by the real Ethernet and WiFi Network Interface Cards. Using bridged mode, I was able to maintain the Tango Device Database server on Ubuntu and use Vito’s Bodytracking device on Windows. The Virtual Machine didn’t noticeably slow down communication across the Tango devices.

This image explains what I’m talking about -

In bridged mode, I chose the IP Address on the host which corresponds to the Virtual Machine interface - vmnet8 in my case. I used the vmnet8 interface on Ubuntu and a similar interface on the Windows Virtual Machine. I read quite a bit about how Networking works in Virtual Machines and was fascinated by the Virtualization in place.
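As a rough sketch of what this means on the client side: Tango clients locate the device database through the TANGO_HOST environment variable, which has the form host:port (10000 is the conventional database port). The address below is a placeholder from a documentation-only range, not the actual vmnet8 address, and the helper name `tango_host_value` is hypothetical.

```python
import os


def tango_host_value(db_address, port=10000):
    """Build a TANGO_HOST string of the form 'host:port'."""
    if not db_address or ":" in db_address:
        raise ValueError("expected a bare hostname or IPv4 address")
    if not 0 < int(port) < 65536:
        raise ValueError("port out of range")
    return "{}:{}".format(db_address, int(port))


# Placeholder address (assumption): replace with the host's vmnet8 address.
HOST_VMNET8_ADDRESS = "192.0.2.10"

# Must be set before any Tango client (e.g. a PyTango.DeviceProxy) is created.
os.environ["TANGO_HOST"] = tango_host_value(HOST_VMNET8_ADDRESS)
```

On the Windows Virtual Machine the same variable can also be set system-wide instead of from Python.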

Bodytracking meets Telerobotics

With Tango up and running, I had to ensure that Vito’s Bodytracking application works on the Virtual Machine. To that end, I installed Kinect for Windows SDK, Kinect Developer Tools, Visual Python, Tango-Controls, and PyTango. Setting a new virtual machine up mildly slowed me down but was a necessary step in the development.

Once I had that bit running, I was able to visualize the simulated Martian Motivity walk done in Innsbruck in a training station. The Bodytracking server created by Vito published events corresponding to the moves attribute which is a list of the following two metrics -

I was able to read the attribute that the Bodytracking device was publishing by subscribing to change events on that attribute. This is done in the following way -

    while TRIGGER:
        # Subscribe to the 'moves' event from the Bodytracking interface
        moves_event = device_proxy.subscribe_event(
            'moves',
            PyTango.EventType.CHANGE_EVENT,
            cb, [])
        # Wait for at least REFRESH_RATE seconds for the next callback.
        time.sleep(REFRESH_RATE)

This ensures that the Subscriber doesn’t exhaust the polled attributes at a rate faster than they are published. In that unfortunate case, an EventManagerException occurs which must be handled properly.

Note the cb argument: it refers to the callback function that is triggered when a change event occurs. The callback function is responsible for reading and processing the attributes.
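A minimal sketch of what such a callback could look like, assuming the object form that PyTango accepts (an object with a `push_event` method). The class name `MovesCallback` and the sample values are made up for illustration, and the events are simulated with stand-in objects so the snippet runs without a Tango device.

```python
from types import SimpleNamespace


class MovesCallback(object):
    """Collects values of the 'moves' attribute as change events arrive."""

    def __init__(self):
        self.received = []
        self.error_count = 0

    def push_event(self, event):
        # PyTango calls push_event() with an event object; when event.err
        # is true the attribute value must not be read.
        if event.err:
            self.error_count += 1
            return
        self.received.append(event.attr_value.value)


# Stand-in events (assumption): real ones come from subscribe_event().
cb = MovesCallback()
cb.push_event(SimpleNamespace(err=False,
                              attr_value=SimpleNamespace(value=[0.5, 1.57])))
cb.push_event(SimpleNamespace(err=True, attr_value=None))
```

Checking `event.err` first keeps a failed event from raising inside the callback.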

The processing part in our case is the core of the Telerobotics-Bodytracking interface. It acts as the intermediary between Telerobotics and Bodytracking - converting the position and orientation values to linear and angular velocities that Husky can understand. I use a high-performance container from the collections module known as deque. It can act both as a stack and as a queue using deque.append, deque.appendleft, deque.pop, and deque.popleft.
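For readers who have not used deque before, here is a short standalone illustration of those four methods. The string and integer items are placeholders, not actual Bodytracking events.

```python
from collections import deque

events = deque()
events.append("e1")      # push on the right
events.append("e2")
events.appendleft("e0")  # push on the left

newest = events.pop()      # stack-style: removes "e2" from the right
oldest = events.popleft()  # queue-style: removes "e0" from the left

# With maxlen set, appending to a full deque silently drops the oldest item.
recent = deque(maxlen=2)
for sample in (1, 2, 3):
    recent.append(sample)
```

A bounded deque like `recent` is one way to keep only the latest few events around.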

To calculate velocity, I compute the differences between consecutive events and divide them by the differences between their timestamps. The events are stored in a deque, popped when necessary, and subtracted from the current event values.

For instance, this is how linear velocity processing takes place -

    # Position and Linear Velocity Processing
    position_previous = position_events.pop()
    position_current = position
    linear_displacement = position_current - position_previous
    linear_speed = linear_displacement / time_delta
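The fragment above can be expanded into a self-contained sketch. This is an assumption about how the pieces fit together, not the actual interface code: the function signature, the (position, timestamp) tuple layout, and the units (metres and seconds) are all illustrative.

```python
from collections import deque


def linear_speed(position_events, position, timestamp):
    """Return speed relative to the last stored (position, timestamp) pair.

    Stores the current sample for the next call. Returns None when there
    is no previous sample or the timestamps do not advance.
    """
    speed = None
    if position_events:
        position_previous, time_previous = position_events.pop()
        time_delta = timestamp - time_previous
        if time_delta > 0:
            speed = (position - position_previous) / time_delta
    position_events.append((position, timestamp))
    return speed


position_events = deque()
first = linear_speed(position_events, 0.0, 10.0)   # no previous sample yet
second = linear_speed(position_events, 0.5, 10.5)  # 0.5 m in 0.5 s
third = linear_speed(position_events, 0.5, 10.5)   # repeated timestamp
```

Guarding against a zero `time_delta` matters here because two events can carry the same timestamp.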

ROS-Telerobotics Interface

We are halfway through the Telerobotics-Bodytracking architecture. Once the velocities are obtained, we have everything we need to send to ROS. The challenge here is to use velocities which ROS and the Husky UGV can understand. The messages are published to ROS only when there is some change in the velocity. This has the added advantage of minimizing communication between ROS and Tango. When working with multiple distributed systems, it is always wise to keep the communication between them minimal. That’s what I’ve aimed to do. I’ll be enhancing the interface even further by adding Trigger Overrides in case of an emergency situation. The speeds currently are not ROS-friendly. I am writing a high-pass and low-pass filter to limit the velocities to what Husky can sustain. Vito and I will be refining the User Step estimation and the corresponding Robot movements respectively.
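The publish-on-change idea can be sketched without ROS at all. Everything below is an assumption for illustration: the limits are placeholder numbers rather than Husky's real ones, the class name is hypothetical, and plain clamping stands in for the filters mentioned above.

```python
MAX_LINEAR = 1.0   # m/s, assumed limit for illustration
MAX_ANGULAR = 2.0  # rad/s, assumed limit for illustration
MIN_CHANGE = 0.01  # smallest change worth publishing (assumption)


def clamp(value, limit):
    """Restrict value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


class ChangeOnlyPublisher(object):
    """Forwards a velocity command only when it differs from the last one."""

    def __init__(self, publish):
        self.publish = publish  # any callable, e.g. a ROS publisher wrapper
        self.last = None

    def send(self, linear, angular):
        command = (clamp(linear, MAX_LINEAR), clamp(angular, MAX_ANGULAR))
        if self.last is not None and all(
                abs(new - old) < MIN_CHANGE
                for new, old in zip(command, self.last)):
            return False  # unchanged: nothing is sent
        self.publish(command)
        self.last = command
        return True


sent = []
publisher = ChangeOnlyPublisher(sent.append)
publisher.send(0.4, 0.0)   # published
publisher.send(0.4, 0.0)   # skipped, nothing changed
publisher.send(3.0, -5.0)  # published after clamping
```

In a real node the `publish` callable would wrap a ROS publisher that builds the velocity message.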

GSoC is only becoming more exciting. I’m certain that I will be contributing to this project after GSoC as well. The Telerobotics scenario is full of possibilities, most of which I’ve tried to cover in my GSoC proposal.

I’m back to my university now and it has become hectic but enjoyably challenging to complete this project. My next post will hopefully be a culmination of the Telerobotics/Bodytracking interface and the integration of 3D streaming with Oculus Rift Virtual Reality.

Ciao!

Siddhant Shrivastava

July 24, 2015

Filed under “GSoC”

Hi! For the past couple of weeks, I’ve been trying to get a lot of things to work. Linux and Computer Networks seem to like me so much that they ensure my attention throughout the course of this program. This time it was dynamic libraries, Virtual Machine Networking, Docker Containers, Head-mounted display errors and so on.

A brief discussion about these:

Dynamic Libraries, Oculus Rift, and Python Bindings

Using the open-source Python bindings for the Oculus SDK available here, Franco and I ran into a problem -

ImportError: <root>/oculusvr/linux-x86-64/libOculusVR.so: undefined symbol: glXMakeCurrent

To get to the root of the problem, I tried to list all dependencies of the shared object file -

    linux-vdso.so.1 => (0x00007ffddb388000)
    librt.so.1 => /lib/x86_64-linux-gnu/librt.so.1 (0x00007f6205e1d000)
    libpthread.so.0 => /lib/x86_64-linux-gnu/libpthread.so.0 (0x00007f6205bff000)
    libX11.so.6 => /usr/lib/x86_64-linux-gnu/libX11.so.6 (0x00007f62058ca000)
    libXrandr.so.2 => /usr/lib/x86_64-linux-gnu/libXrandr.so.2 (0x00007f62056c0000)
    libstdc++.so.6 => /usr/lib/x86_64-linux-gnu/libstdc++.so.6 (0x00007f62053bc000)
    libm.so.6 => /lib/x86_64-linux-gnu/libm.so.6 (0x00007f62050b6000)
    libgcc_s.so.1 => /lib/x86_64-linux-gnu/libgcc_s.so.1 (0x00007f6204ea0000)
    libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f6204adb000)
    /lib64/ld-linux-x86-64.so.2 (0x00007f6206337000)
    libxcb.so.1 => /usr/lib/x86_64-linux-gnu/libxcb.so.1 (0x00007f62048bc000)
    libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007f62046b8000)
    libXext.so.6 => /usr/lib/x86_64-linux-gnu/libXext.so.6 (0x00007f62044a6000)
    libXrender.so.1 => /usr/lib/x86_64-linux-gnu/libXrender.so.1 (0x00007f620429c000)
    libXau.so.6 => /usr/lib/x86_64-linux-gnu/libXau.so.6 (0x00007f6204098000)
    libXdmcp.so.6 => /usr/lib/x86_64-linux-gnu/libXdmcp.so.6 (0x00007f6203e92000)
    undefined symbol: glXMakeCurrent (./libOculusVR.so)
    undefined symbol: glEnable (./libOculusVR.so)
    undefined symbol: glFrontFace (./libOculusVR.so)
    undefined symbol: glDisable (./libOculusVR.so)
    undefined symbol: glClear (./libOculusVR.so)
    undefined symbol: glGetError (./libOculusVR.so)
    undefined symbol: glXDestroyContext (./libOculusVR.so)
    undefined symbol: glXCreateContext (./libOculusVR.so)
    undefined symbol: glClearColor (./libOculusVR.so)
    undefined symbol: glXGetCurrentContext (./libOculusVR.so)
    undefined symbol: glXSwapBuffers (./libOculusVR.so)
    undefined symbol: glColorMask (./libOculusVR.so)
    undefined symbol: glBlendFunc (./libOculusVR.so)
    undefined symbol: glBindTexture (./libOculusVR.so)
    undefined symbol: glDepthMask (./libOculusVR.so)
    undefined symbol: glDeleteTextures (./libOculusVR.so)
    undefined symbol: glGetIntegerv (./libOculusVR.so)
    undefined symbol: glXGetCurrentDrawable (./libOculusVR.so)
    undefined symbol: glDrawElements (./libOculusVR.so)
    undefined symbol: glTexImage2D (./libOculusVR.so)
    undefined symbol: glXGetClientString (./libOculusVR.so)
    undefined symbol: glDrawArrays (./libOculusVR.so)
    undefined symbol: glGetString (./libOculusVR.so)
    undefined symbol: glXGetProcAddress (./libOculusVR.so)
    undefined symbol: glViewport (./libOculusVR.so)
    undefined symbol: glTexParameteri (./libOculusVR.so)
    undefined symbol: glGenTextures (./libOculusVR.so)
    undefined symbol: glFinish (./libOculusVR.so)
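A listing like this is easier to read once the undefined symbols are grouped by prefix. The following is a small hypothetical helper for doing that, run here on a four-line excerpt of the output; the function names are made up for this sketch.

```python
import re

UNDEFINED = re.compile(r"undefined symbol:\s+(\w+)")


def undefined_symbols(listing):
    """Return the symbol names reported as undefined in a linker listing."""
    return UNDEFINED.findall(listing)


def prefixes(symbols):
    """Group symbols by API prefix: 'glX' for GLX calls, else two letters."""
    counts = {}
    for name in symbols:
        key = "glX" if name.startswith("glX") else name[:2]
        counts[key] = counts.get(key, 0) + 1
    return counts


sample = """\
libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007f62046b8000)
undefined symbol: glXMakeCurrent (./libOculusVR.so)
undefined symbol: glEnable (./libOculusVR.so)
undefined symbol: glClear (./libOculusVR.so)
"""
symbols = undefined_symbols(sample)
summary = prefixes(symbols)
```

Every missing name starts with gl or glX, the prefixes used by the OpenGL and GLX APIs.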

This clearly implied one thing - libGL was not being linked. My task then was to somehow link libGL to the SO file that came with the Python Bindings. I tried out the following two options -

- Creating my own bindings: Tried to regenerate the SO file from the Oculus C SDK by using the amazing Python Ctypesgen. This method didn’t work out as I couldn’t resolve the header files that are required by Ctypesgen. Nevertheless, I learned how to create Python Bindings and that is a huge take-away from the exercise. I had always wondered how Python interfaces are created out of programs written in other languages.

- Making the existing shared object file believe that it is linked to libGL: So here’s what I did - after a lot of searching, I found the nifty little environment variable that worked wonders for our Oculus development - LD_PRELOAD

As this and this article explain, LD_PRELOAD makes it possible to force-load a dynamically linked shared object into memory.

If you set LD_PRELOAD to the path of a shared object, that file will be loaded before any other library (including the C runtime, libc.so). For example, to run ls with your special malloc() implementation, do this:

$ LD_PRELOAD=/path/to/my/malloc.so /bin/ls

Thus, the solution to my problem was to place this in the .bashrc file -

export LD_PRELOAD="/usr/lib/x86_64-linux-gnu/libGL.so"

This allowed Franco to create the Oculus Test Tango server and ensured that our Oculus Rift development efforts continue with gusto.
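A possible in-process alternative, sketched here as an assumption and untested against the Oculus bindings, is to load libGL with the RTLD_GLOBAL flag from Python before the bindings are imported, so that its symbols are visible to libraries loaded afterwards. The helper name `preload_global` is hypothetical.

```python
import ctypes


def preload_global(path):
    """Load a shared object with RTLD_GLOBAL; return None if it is missing."""
    try:
        return ctypes.CDLL(path, mode=ctypes.RTLD_GLOBAL)
    except OSError:
        return None


# Path taken from the .bashrc line above; it may differ on other systems.
libgl = preload_global("/usr/lib/x86_64-linux-gnu/libGL.so")
if libgl is None:
    print("libGL not found; the bindings would still fail to import")
# The Oculus bindings would be imported only after this point.
```

Unlike LD_PRELOAD, this only affects the one Python process that runs it.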

ROS and Autonomous Navigation

On the programming side, I’ve been playing around with actionlib to interface Bodytracking with Telerobotics. I have created a simple walker script which gives the robot a certain degree of autonomy: it overrides human teleoperation commands to avoid collisions with objects. An obstacle could be a Martian rock in a simulated environment or an uneven terrain with a possible ditch ahead. To achieve this, I use the LaserScan message and check the range readings at frequent intervals. The LIDAR readings indicate which of the following states the robot is in -

- Approaching an obstacle

- Going away from an obstacle

- Hitting an obstacle

The state can be inferred from the LaserScan Messages. A ROS Action Server then waits for one of these events to happen and triggers the callback which tells the robot to stop, turn and continue.
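How the state could be inferred from two consecutive scans is sketched below in plain Python, without ROS. The inputs stand for the `ranges` field of a LaserScan message; the threshold value and the function names are assumptions made for illustration.

```python
APPROACHING, RETREATING, HIT = "approaching", "retreating", "hit"
HIT_DISTANCE = 0.3  # metres; assumed threshold for illustration


def nearest(ranges):
    """Smallest finite, positive reading in a list of range values."""
    # r == r is False only for NaN, which a scan may contain.
    valid = [r for r in ranges if r == r and 0.0 < r < float("inf")]
    return min(valid) if valid else float("inf")


def classify(previous_ranges, current_ranges):
    """Infer the obstacle state from two consecutive scans."""
    before, now = nearest(previous_ranges), nearest(current_ranges)
    if now <= HIT_DISTANCE:
        return HIT
    # An unchanged distance counts as retreating in this sketch.
    return APPROACHING if now < before else RETREATING


nan = float("nan")
state_a = classify([2.0, 1.5, nan], [1.8, 1.2, nan])
state_b = classify([1.2, 1.4], [1.6, 2.0])
state_c = classify([0.5, 0.9], [0.25, 0.8])
```

An action server callback could then react to a transition into the hit or approaching state.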

Windows and PyKinect

In order to run Vito’s bodytracking code, I needed a Windows installation. Running into problems with a 32-bit Windows 7 Virtual Machine image I had, I needed to reinstall and use a 64-bit Virtual Machine image. I installed all the dependencies to run the bodytracking code. I am still stuck with Networking modes between the Virtual Machine and the Host machine. The TANGO host needs to be configured correctly to allow the TANGO_MASTER to point to the host and the TANGO_HOST to the virtual machine.

Docker and Qt Apps

Qt applications don’t seem to work with sharing the display in a Docker container. The way out is to create users in the Docker container which I’m currently doing. I’ll enable VNC and X-forwarding to allow the ROS Qt applications to work so that the other members of the Italian Mars Society can use the Docker container directly.

Gazebo Mars model

I took a brief look at the 3D models of Martian terrain available for free use on the Internet. I’ll be trying to obtain the Gale Crater region and represent it in Gazebo to drive the Husky in a Martian Terrain.

Documentation week!

In addition to strong-arming my CS concepts against the Networking and Linux issues that loom over the project currently, I updated and added documentation for the modules developed so far.

Hope the next post explains how I solved the problems described in this post. Ciao!