Mid-term Report - GSoC '15

Siddhant Shrivastava

July 01, 2015

Filed under “GSoC”

Hi all! I made it through the first half of the GSoC 2015 program. This is the evaluation week of Google Summer of Code 2015 with the Python Software Foundation and the Italian Mars Society ERAS Project. Mentors and students evaluate the journey so far by answering questions about their students and mentors respectively. Comparing my progress against the timeline, I reckon that I am on track with the project so far.

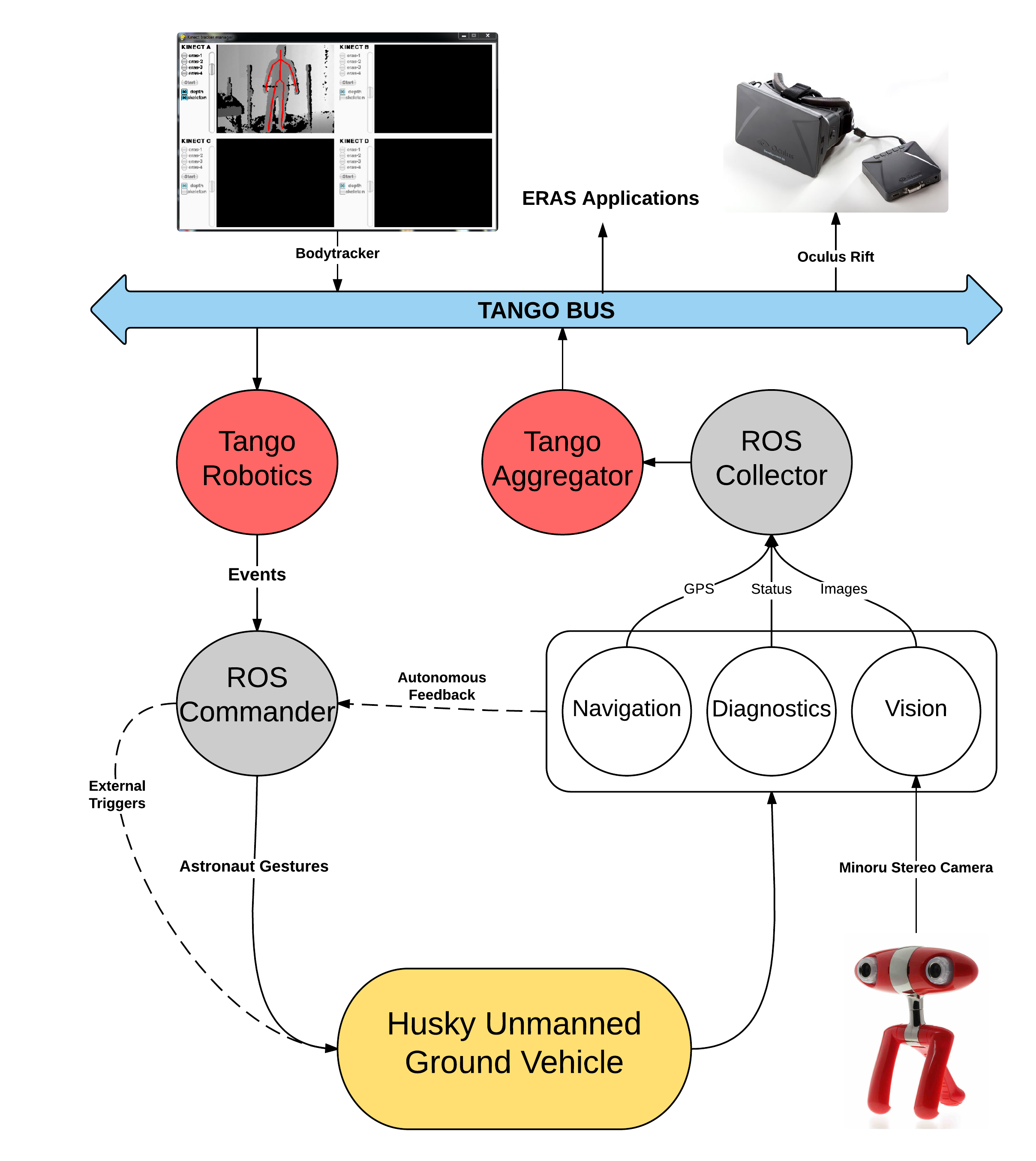

The entire Telerobotics with Virtual Reality project can be visualized in the following diagram:

Achievements

Husky-ROS-Tango Interface

- ROS-Tango interfaces to connect the Telerobotics module with the rest of ERAS.


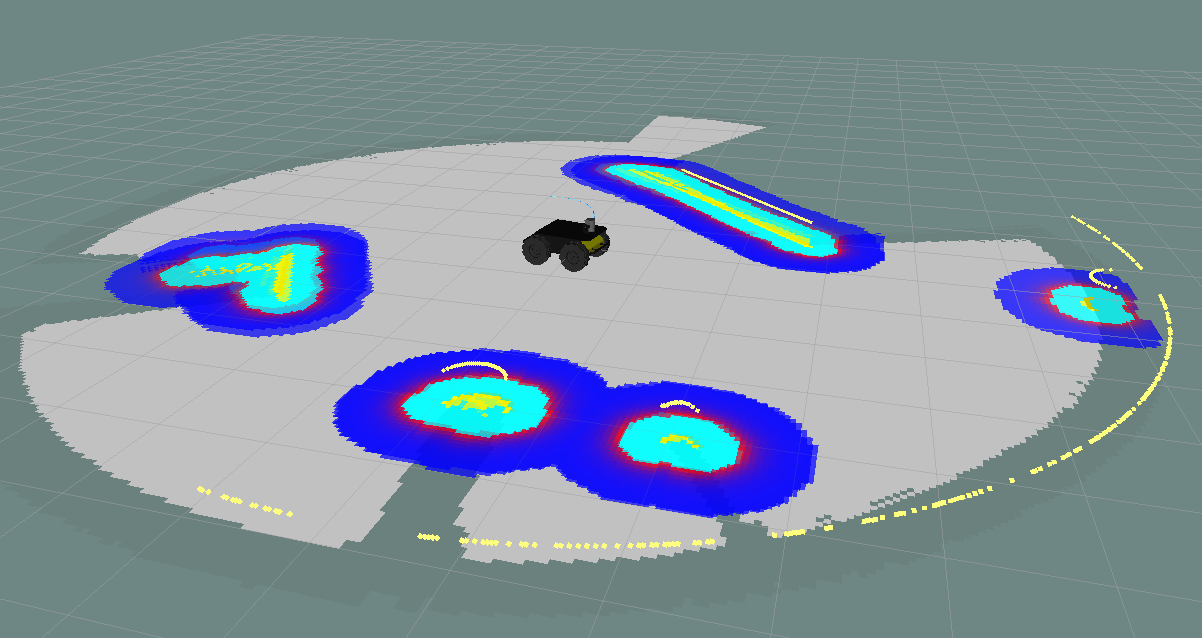

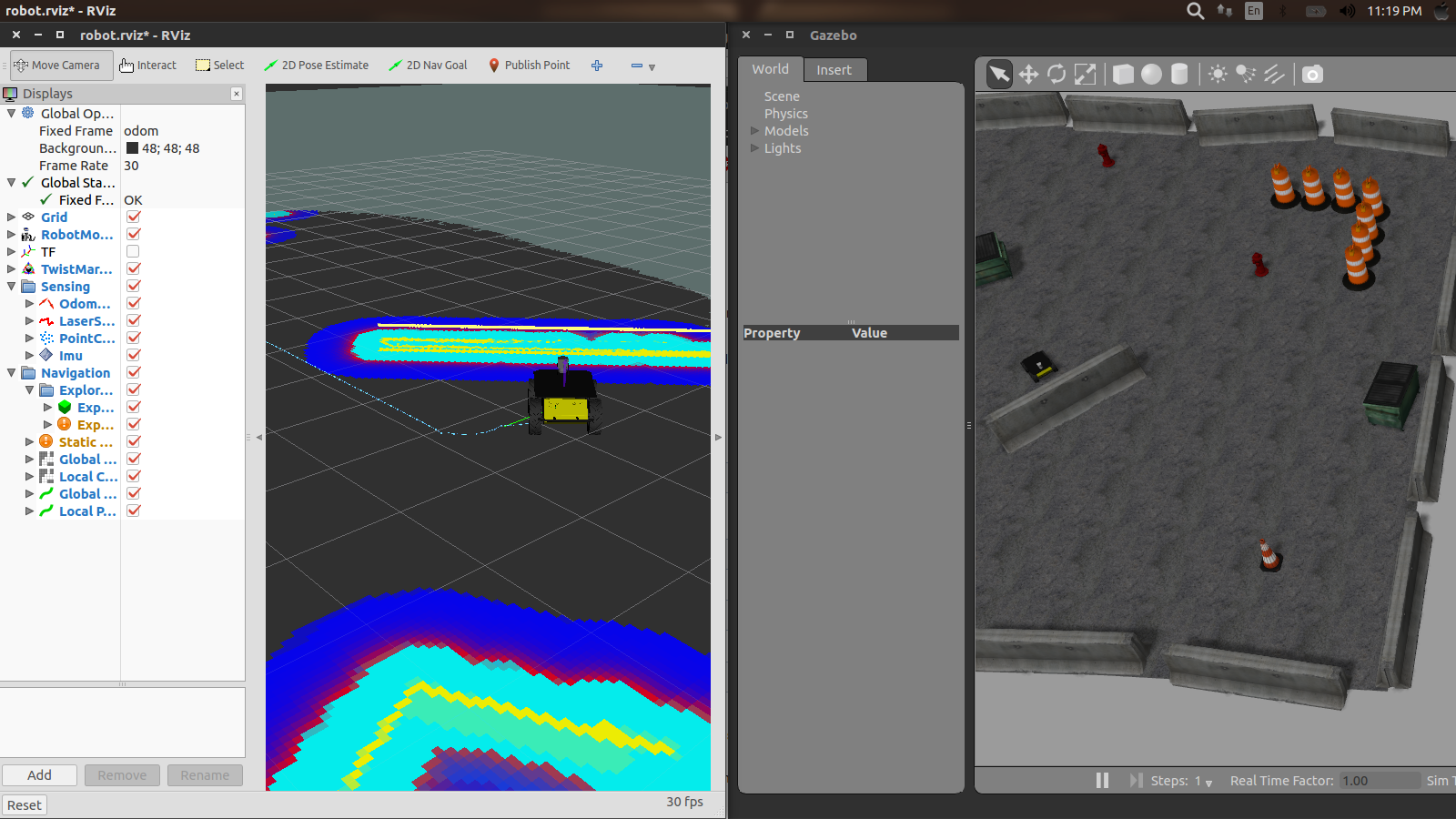

ROS Interfaces for Navigation and Control of Husky

- Logging Diagnostics of the robot to the Tango Bus

- Driving the Husky around using human commands

Video Streaming

- Single Camera Video streaming to Blender Game Engine

This is how it works: FFmpeg's ffserver acts as the streaming server, and the Blender Game Engine subscribes to its stream.

The ffserver.conf file, which describes the characteristics of the stream, is configured as follows:

    Port 8190
    BindAddress 0.0.0.0
    MaxClients 10
    MaxBandwidth 50000
    NoDaemon

    <Feed webcam.ffm>
    file /tmp/webcam.ffm
    FileMaxSize 2000M
    </Feed>

    <Stream webcam.mjpeg>
    Feed webcam.ffm
    Format mjpeg
    VideoSize 640x480
    VideoFrameRate 30
    VideoBitRate 24300
    VideoQMin 1
    VideoQMax 5
    </Stream>

Then the Blender Game Engine and its associated Python library bge kick in to display the stream on the Video Texture:

    import bge

    # obj, ID, and FFMPEG_PARAM are defined elsewhere in the script.
    # Get an instance of the video texture
    bge.logic.video = bge.texture.Texture(obj, ID)
    # An ffmpeg server is streaming the feed on the IP:PORT/FILE
    # specified in FFMPEG_PARAM;
    # BGE reads the stream from the mjpeg file.
    bge.logic.video.source = bge.texture.VideoFFmpeg(FFMPEG_PARAM)
    bge.logic.video.source.play()
    # Update the texture with the latest frame from the stream
    bge.logic.video.refresh(True)

The entire source code for single camera streaming can be found in this repository.
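As a rough illustration of how the IP:PORT/FILE address relates to the server configuration, the sketch below reads the Port directive and the Stream block names from an ffserver-style config and builds the HTTP URL for each stream. The helper name `stream_urls` and the host `localhost` are assumptions made for this example; the actual value of FFMPEG_PARAM used in the project is not shown in this post.

```python
import re

# Trimmed copy of the configuration shown above.
FFSERVER_CONF = """\
Port 8190
BindAddress 0.0.0.0
<Feed webcam.ffm>
file /tmp/webcam.ffm
</Feed>
<Stream webcam.mjpeg>
Feed webcam.ffm
Format mjpeg
</Stream>
"""


def stream_urls(conf_text, host):
    """Return {stream_name: url} for every <Stream> block in the config.

    Hypothetical helper for illustration; not part of the ERAS code base.
    """
    port = None
    streams = []
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        port_match = re.match(r"(?:HTTPPort|Port)\s+(\d+)$", line)
        if port_match:
            port = int(port_match.group(1))
            continue
        stream_match = re.match(r"<Stream\s+([^>\s]+)>$", line)
        if stream_match:
            streams.append(stream_match.group(1))
    if port is None:
        raise ValueError("no Port directive found")
    return {name: "http://{}:{}/{}".format(host, port, name) for name in streams}


# "localhost" is an assumed host; substitute the machine running ffserver.
urls = stream_urls(FFSERVER_CONF, "localhost")
print(urls["webcam.mjpeg"])  # http://localhost:8190/webcam.mjpeg
```

Only the Stream name ends up in the URL that a client such as the Blender Game Engine reads; the Feed entry is the buffer that ffmpeg writes into.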

- Setting up the Minoru Camera for stereo vision

It turns out that this camera can stream at 30 frames per second from both of its cameras. Figuring out the optimal settings for the Minoru webcam was particularly challenging this past week. Performance depends on the video buffer memory that the Linux kernel allocates for libuvc- and v4l2-compatible webcams, so different kernel versions perform differently. With the kernel version that I am using, it is inefficient to stream the left and right cameras at more than 15 fps.

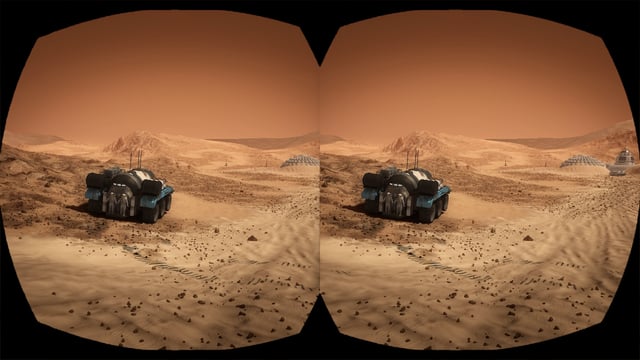
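To get a feel for why halving the frame rate eases the load on the kernel's video buffers, here is a back-of-the-envelope calculation. It assumes each camera delivers 640x480 frames (the VideoSize used in the ffserver configuration) in an uncompressed 2-bytes-per-pixel format such as YUYV; the format the Minoru actually negotiates may differ, so treat the numbers as an illustration only.

```python
def raw_stream_rate(width, height, bytes_per_pixel, fps, cameras=1):
    """Uncompressed video data rate in bytes per second."""
    return width * height * bytes_per_pixel * fps * cameras


# Assumed: 640x480 per camera, 2 bytes per pixel (e.g. YUYV), two cameras.
for fps in (30, 15):
    rate = raw_stream_rate(640, 480, 2, fps, cameras=2)
    print("{} fps, both cameras: {:.1f} MB/s".format(fps, rate / 1e6))
# 30 fps, both cameras: 36.9 MB/s
# 15 fps, both cameras: 18.4 MB/s
```

Under these assumptions, dropping from 30 fps to 15 fps halves the amount of raw data that has to pass through the buffers every second.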

- Setting up the Oculus Rift DK1 for the Virtual Reality work in the upcoming second term

Crash-testing and Roadblocks

This project was not without its share of obstacles. A few memorable roadblocks come to mind:

- Remote Husky testing - Matt (from Canada), Franco (from Italy), and I (from India) tested whether we could remotely control Husky. The main issue we faced was network connectivity. We were all on geographically separate networks, and the ROS installations on our machines could not resolve one another across them. As a result, some messages (like GPS) were accessible whereas others (like Husky Status messages) were not. The solution we settled on is to create a Virtual Private Network for our computers for future testing.

- Minoru Camera Performance differences - Since the Minoru’s performance varies with the kernel version, I had to drop the frame rate to 15 fps for both cameras before streaming them into the Blender Game Engine. This temporary hack should be resolved as ERAS moves to newer Linux versions.

- Tango related - Tango-controls is a sophisticated SCADA library with a server database for maintaining device server lists. It was painful to use the provided GUI, Jive, to configure the device servers. To bring the process in line with other development activities, I wrote a small interactive CLI script for registering and de-registering device servers. A blog post explains this in detail.

- Common testing platform - I needed to use ROS Indigo, which is supported only on Ubuntu 14.04, while ERAS is currently using Ubuntu 14.10. For the Italian Mars Society members to execute my scripts, they needed my version of Ubuntu. The solution was virtual Linux containers: we are using a Docker image that my mentors can run on their machines regardless of their native OS. This post explains this point.
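On the remote Husky testing roadblock: ROS nodes register with the master using their machine's hostname (or the value of ROS_HOSTNAME / ROS_IP), and subscribers then connect to publishers directly at that address, so every machine has to be able to resolve every other one. A quick way to spot the problem before a test session is to check name resolution up front. The sketch below is only an illustration; the function name and the peer hostnames are made up for this example and are not taken from the ERAS setup.

```python
import socket


def unresolved_hosts(hostnames):
    """Return the hostnames that this machine cannot resolve to an IP address."""
    missing = []
    for name in hostnames:
        try:
            socket.gethostbyname(name)
        except OSError:  # socket.gaierror is a subclass of OSError
            missing.append(name)
    return missing


# Hypothetical peer list; ".invalid" is a reserved TLD that should never resolve.
peers = ["localhost", "husky-robot.invalid"]
print(unresolved_hosts(peers))
```

Any name reported here would need an /etc/hosts entry, a DNS record, or a shared VPN before topics published from that machine can be received reliably.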

Expectations from the second term

This is a huge project in that I have to deal with many different technologies, such as:

- Robot Operating System

- FFmpeg

- Blender Game Engine

- Oculus VR SDK

- Tango-Controls

So far, the journey has been exciting and there has been a lot of learning and development. The second term will be intense, challenging, and above all, fun.

To-do list:

- Get Minoru webcam to work with ffmpeg streaming

- Use Oculus for an Augmented Reality application

- Integrate Bodytracking with Telerobotics

- Automation in Husky movement and using a UR5 manipulator

- Set up a PPTP or OpenVPN for ERAS

Time really flies when I am learning new things. GSoC so far has taught me not only how to avoid being a bad software engineer, but also how to be a good open source community contributor. That is what the spirit of Google Summer of Code is about, and I have imbibed a lot. Besides, working with the Italian Mars Society has also motivated me to learn the Italian language. So Python is not the only language that I’m practicing over this summer ;)

Here’s to the second term of Google Summer of Code 2015!

Ciao :)